Why Meta Creative Testing Has Changed

In early 2025, Meta shifted its optimization core with what it internally calls the Andromeda architecture: a next-generation ad selection engine built around deep learning, creative signal interpretation, and context-aware delivery. This wasn’t a minor adjustment to delivery priorities or interest targeting. It was a fundamental shift in how Meta creative testing works and a redefinition of how performance is discovered and scaled on Meta’s platforms.

For the past 10 years, most paid media strategies treated audience targeting as the first principle of performance. Marketers precisely layered interests, exclusions, and lookalikes in pursuit of tightly defined segments. Creative was important but largely incremental: modifications in copy, design, or product focus layered on top of fixed audience hypotheses.

So in 2026, instead of asking:

“Which audience matches this ad best?”

Meta asks:

“Which creative moments perform best in specific situations, signal clear user intent, and drive strong reactions, and how can we find more of those moments?”

This fundamental shift answers the questions many advertisers are asking:

- Why does Meta keep pushing spend into the same few creatives no matter how many variations you upload?

- Why is reach stagnating even though you’re testing new audiences and interests?

- Why does performance break when you try to scale budgets, even on winning ads?

- Why does broad targeting underperform despite following Meta’s recommendations?

Under Andromeda, Meta no longer optimizes around fixed audience segments. Instead, it optimizes around creative resonance – identifying patterns in how ads capture attention, keep users engaged, and influence behavior across billions of real-time interactions, then scaling those patterns wherever they are most likely to perform.

Where targeting once defined who we reached, creative now defines what gets served and where within the algorithm’s prediction ecosystem.

What Happens if You Ignore It

In the Andromeda era, ignoring creative-led optimization creates a compounding disadvantage.

Here’s what happens when teams don’t adapt:

Similar creatives now get no push from the algorithm. If you play by the old rulebook, only one or a few ads will achieve meaningful reach.

In short: if you don’t evolve your Meta creative testing approach, Meta will still optimize – just not in your favor.

What Meta’s Andromeda Algorithm Really Means for Advertisers

Creative signals are now the new targeting

Under Andromeda, Meta didn’t remove targeting; it repositioned it.

Demographics, geolocation, and broad audience definitions still exist, but they no longer drive performance discovery in the way they once did. Instead, Meta uses them as basic boundaries.

The real optimization happens inside the system, where creative signals determine distribution.

In practical terms, Meta now evaluates creatives across multiple dimensions simultaneously:

- Visual structure (format, pacing, framing, scene changes)

- Narrative logic (problem–solution, education, social proof, aspiration)

- Hook mechanics (first 1–3 seconds, pattern interruption, curiosity triggers)

- Emotional tone (authority, relatability, urgency, calm confidence)

- Engagement behavior (hold rate, rewatches, saves, comments, downstream clicks)

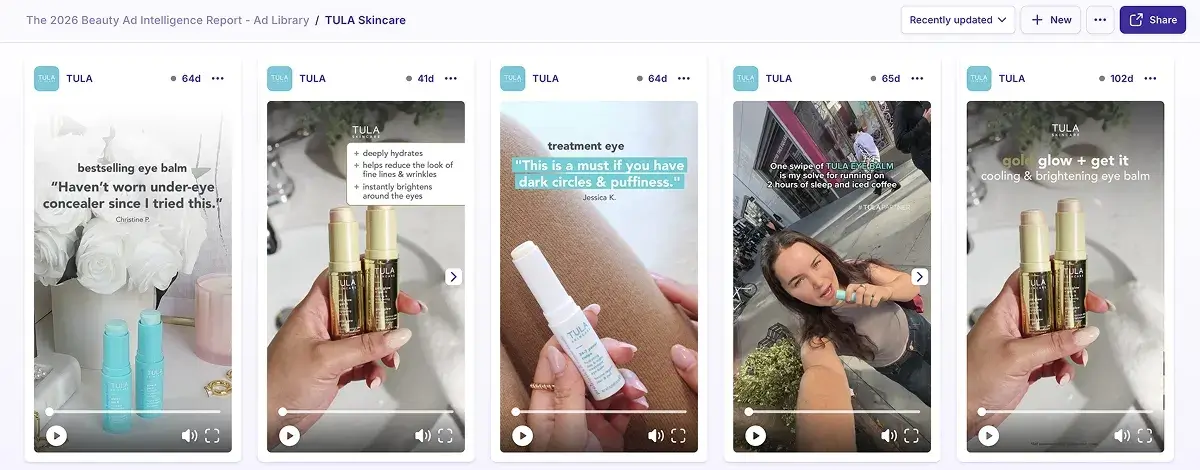

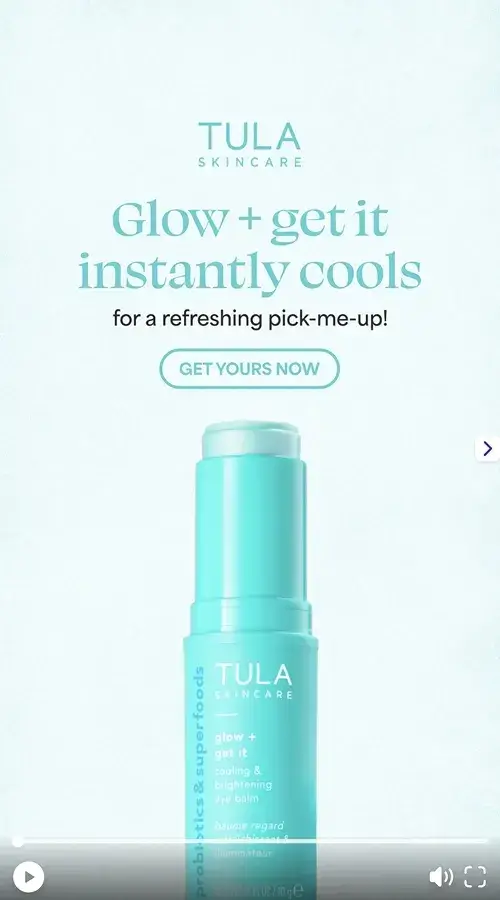

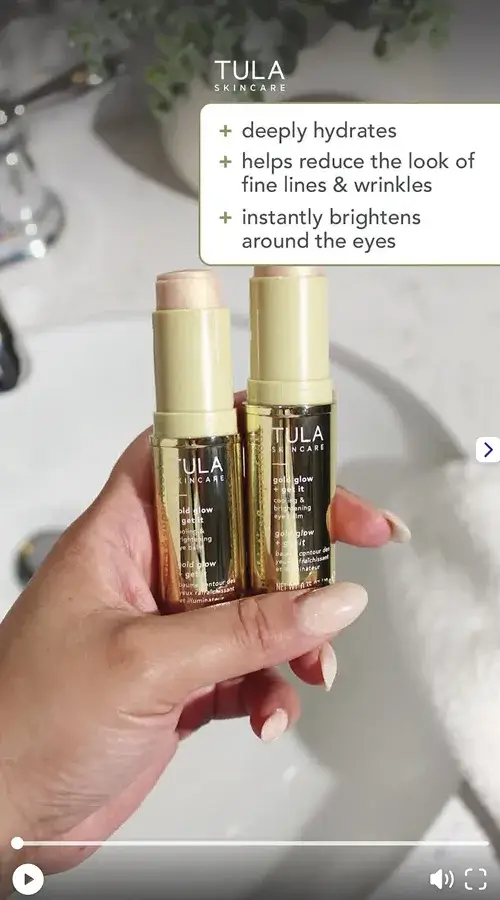

Tula Skincare ads from the ad library of the 2026 Beauty Ad Intelligence Report

These signals are continuously tested against different user contexts: (1) time of day, (2) content, (3) intent clusters, and (4) behavioral patterns.

The algorithm doesn’t need you to tell it who the ad is for. It learns when and why a creative works, meaning the context in which it performs best: the viewer’s mindset and intent, the type of content they watch right before and after, the placement (Reels, Stories, Feed), the moment in their day, and whether they’ve interacted with similar brands before. It then looks for more people in similar contexts to show that ad to.

This is why two brands can run the same broad targeting and see radically different results. The advantage no longer comes from audience engineering. It comes from creative signal quality and diversity.

On the left side, you see two (sufficiently) different ads from Tula; on the right side, you see testing versions done the old way.

For advertisers, this means one thing:

If your creative library lacks meaningful variation in message, format, and narrative, Meta has nothing to optimize with, no matter how sophisticated your targeting setup looks.

Key differences between Andromeda and the old system

The easiest way to understand Andromeda is to compare how performance used to be discovered versus how it’s discovered now.

Then:

- Performance was unlocked by audience segmentation

- Creative was optimized within predefined targeting buckets

- Tests focused on incremental variations

- Early results were treated as definitive

- Scaling meant duplicating what already worked

Now:

- Performance is unlocked by creative exploration

- Audiences are fluid, dynamic, and largely algorithm-defined

- Tests require conceptual differentiation, not cosmetic tweaks

- Early results are directional, not final

- Scaling depends on how many distinct creative signals Meta can learn from

In the old system, advertisers tried to control delivery. In the new system, you influence it through the inputs you give the algorithm.

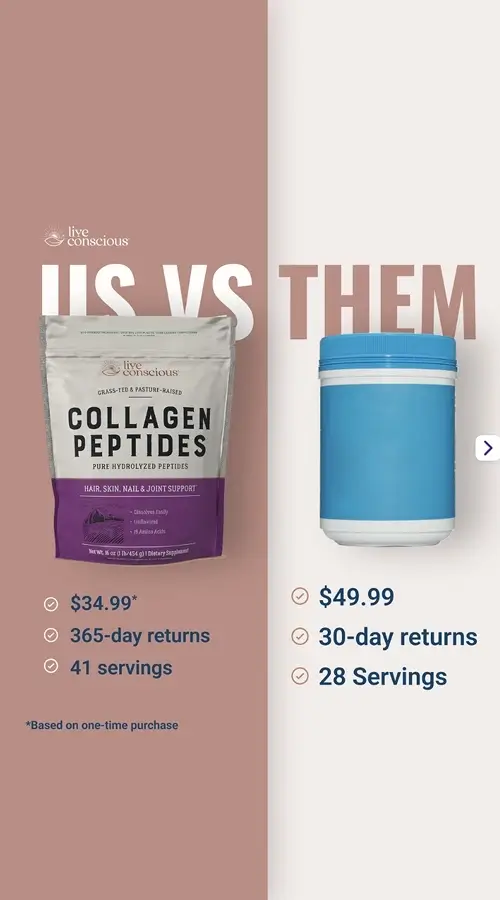

(Different enough) ad creatives (demonstration-single image, value-UGC, demonstration-UGC) from Live Conscious

Three Core Creative Testing Principles in 2026

Creative testing didn’t get more complicated under Andromeda; it got less forgiving.

Meta no longer rewards simply uploading more ads. It rewards intentional diversity, clear signal design, and systems that can sustain exploration over time. The brands that win aren’t the ones producing the most ads, but the ones producing the right kind of variation.

These are the three principles that now define effective Meta creative testing.

Principle 1: Feed the algorithm real variety

Andromeda doesn’t learn from “slightly different.” It learns from meaningfully different.

Real variety means changing at least one core dimension of the ad:

- the story being told

- the problem being framed

- the emotional driver

- the role of the brand in the narrative

For example:

- Education vs. transformation

- Authority-led vs. peer-led

- Functional proof vs. lifestyle aspiration

- Founder POV vs. customer POV

If a human can immediately explain why two ads are different, Meta usually can too. If the difference needs explanation, it’s probably not a new signal.
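One way to make this check concrete before launch is to tag each ad with the four core dimensions listed above and flag pairs that share all of them. The sketch below is a minimal illustration: the `Creative` fields and tag values are hypothetical labels a team would assign by hand, not anything Meta exposes, and matching tags only approximate what the algorithm might treat as the same signal.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Creative:
    # All fields are hand-assigned, hypothetical tags (not Meta fields).
    name: str
    story: str
    problem: str
    emotion: str
    brand_role: str

    def core(self) -> tuple:
        """Core dimensions from the list above, as a comparable tuple."""
        return (self.story, self.problem, self.emotion, self.brand_role)


def redundant_pairs(creatives):
    """Return name pairs whose core dimensions are all identical."""
    return [
        (a.name, b.name)
        for a, b in combinations(creatives, 2)
        if a.core() == b.core()
    ]


# Illustrative library: the first two ads differ only cosmetically.
ads = [
    Creative("founder_video", "education", "dry skin", "authority", "expert"),
    Creative("founder_video_v2", "education", "dry skin", "authority", "expert"),
    Creative("ugc_review", "transformation", "dry skin", "relatability", "peer"),
]
print(redundant_pairs(ads))
```

Any pair this returns is a candidate for merging or reworking, since on paper it changes none of the core dimensions.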

Founder and UGC video ads from The Outset (the core dimension changes between them)

Principle 2: Design creatives to generate rich signals

Under Andromeda, performance isn’t judged by CTR alone. Meta evaluates creatives based on how much behavioral information they generate. The more signals an ad produces, the easier it is for the algorithm to understand where and when it works best.

High-signal creatives tend to:

- hold attention longer

- provoke reactions (comments, saves, shares)

- invite exploration rather than force conversion

- perform across multiple placements and contexts

The goal of testing is not just to find winners. It’s to train the algorithm by showing it which messages, formats, emotions, and contexts work for your brand, so it can predict performance more accurately.

Osea Malibu ads from our beauty ad research

Principle 3: Test concepts, not ads

This is the most important shift and the one most teams struggle with. In 2026, ads are execution units. Concepts are the real test variables.

Meta learns faster when multiple ads reinforce the same signal from different angles. One concept should be expressed through multiple executions, such as different formats (UGC, single image, motion, carousel), different lengths, and different hooks that all serve the same idea.

Stop chasing isolated winners and start building repeatable creative frameworks.

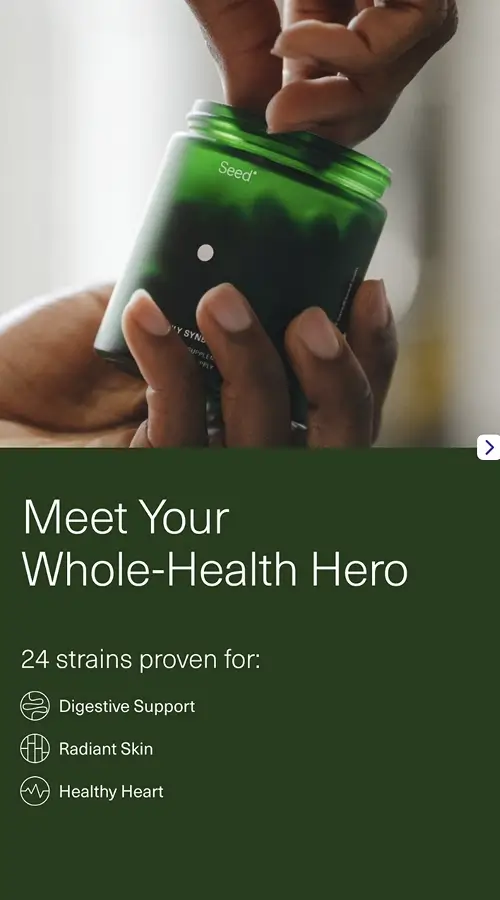

Seed ads from our supplement ad intelligence report

The creative framework for Meta in the Andromeda era

In a world where Meta’s algorithm now prefers rich creative signals over narrow audience targeting, brands need a creative system. That’s where Evolut’s trademarked OMNIPRESENCE™ strategy comes in: a predictable, strategic, and scalable content production and creative testing system built to generate the kinds of signals Meta’s Andromeda architecture thrives on.

What OMNIPRESENCE™ actually is:

At its core, OMNIPRESENCE™ is a content production and testing system, not just a strategy. It’s designed to:

- sustain high-signal creative delivery across paid and organic channels

- promote diversity in message, format, and narrative logic

- maintain consistent visibility across user journeys & platforms

- reduce reliance on short bursts of performance creatives

- build trust and preference before conversion

Below are real creatives we’ve produced using Evolut’s OMNIPRESENCE™ method, which structures output into content layers, each serving distinct purposes.

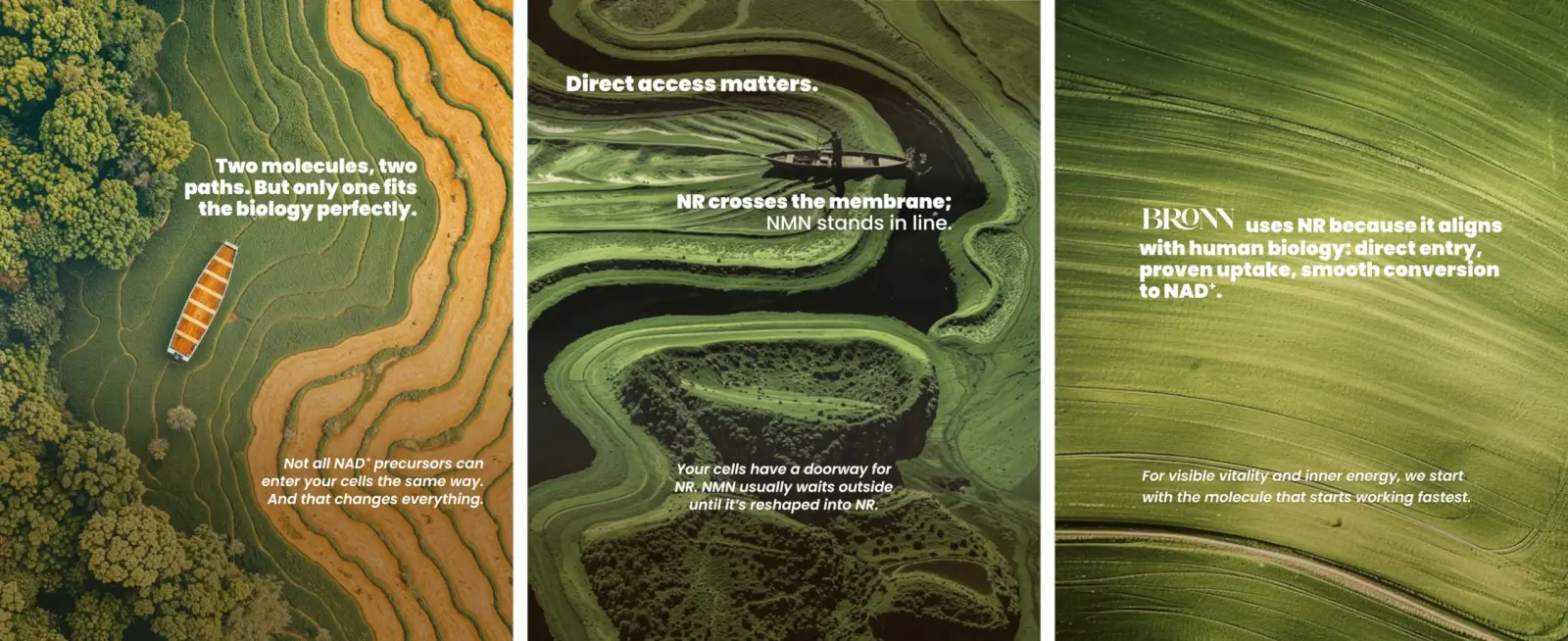

1) Value & Education Content

Purpose: Teach, enlighten, build trust.

Signal: High engagement and cognitive investment.

2) Demonstration & Proof Content

Purpose: Show product value in action.

Signal: Comprehension + conversion intent.

Demonstration creatives from Solanie Professional Cosmetics (HU) and Ingenious (UK).

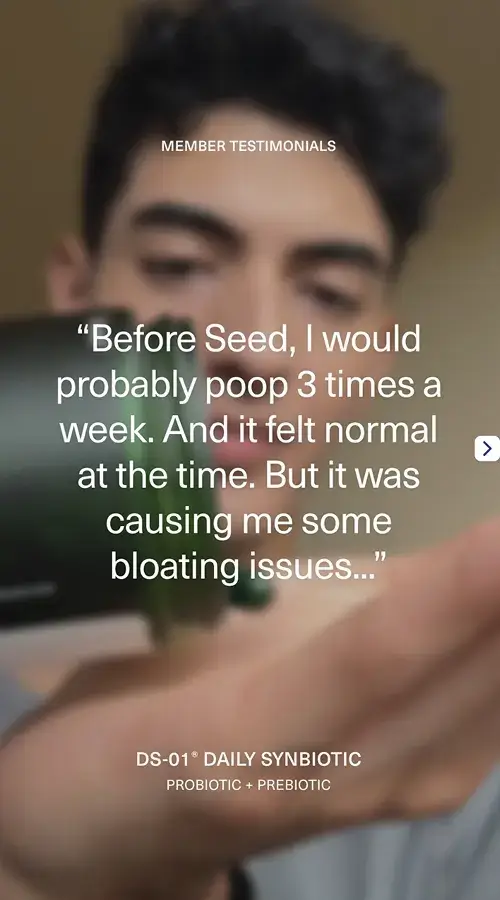

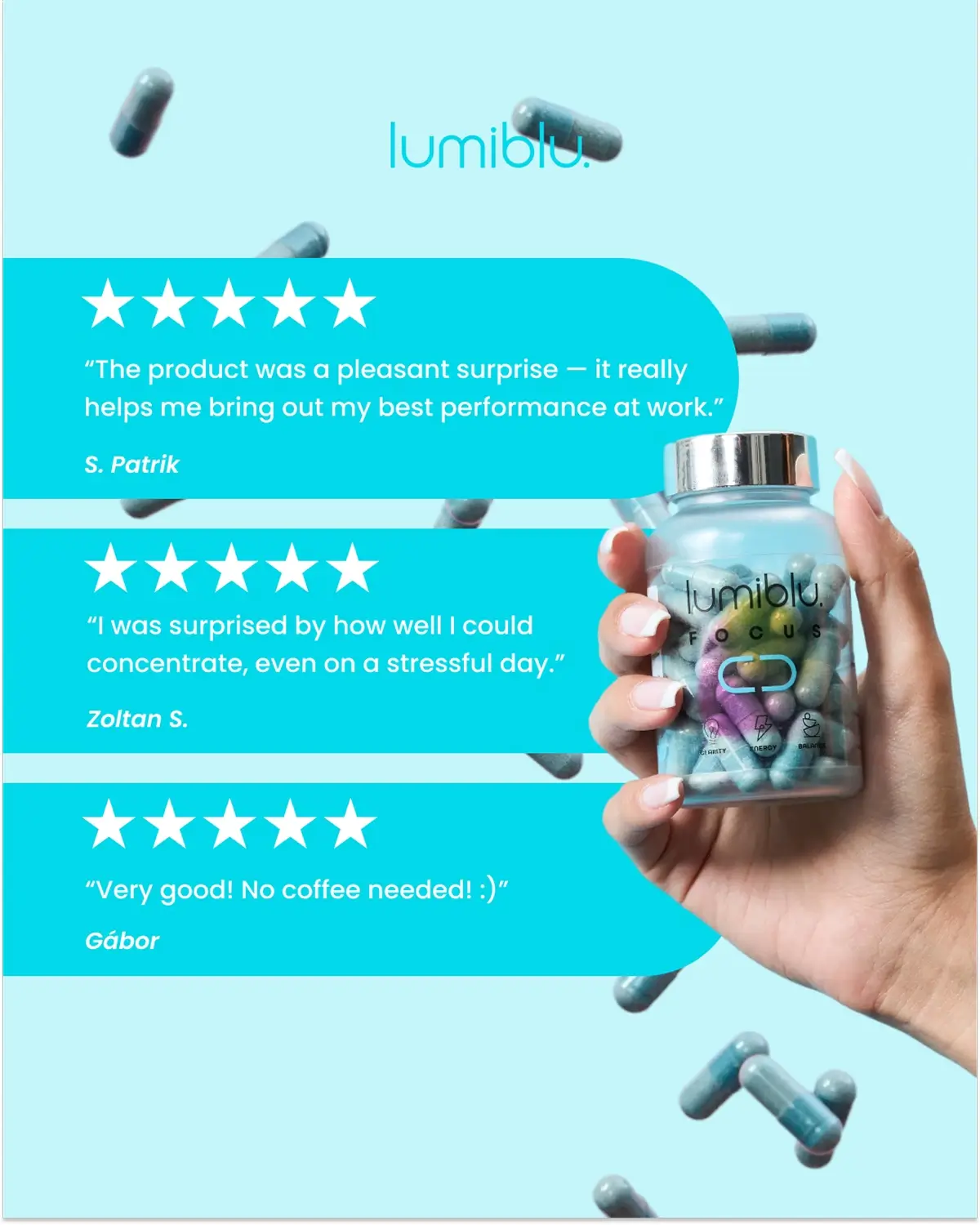

3) Social Proof & Testimonial Content

Purpose: Anchor credibility and reduce risk.

Signal: Emotional resonance and trust cues.

Social proof creatives from Triple Solution Skincare (CH) and Lumiblu (HU).

4) Lifestyle & Aspirational Content

Purpose: Strengthen brand identity and relatability.

Signal: Brand affinity & long-term memory.

Lifestyle creatives from Solanie (HU) and GOLD Professional (DK).

5) Call-to-Action Content

Purpose: Convert interest into action.

Signal: Direct response and outcome metrics.

CTA creatives from Cellaro (SE), Ingenious (UK) and Mai Nami (UK).

This layered architecture ensures that your creative strategy isn’t focused only on conversion ads, but instead on feeding Meta with a balanced stream of signals from awareness to action.

Organizational Impact

Why Meta creative testing is no longer a media problem, but an operating model decision

The Andromeda shift didn’t just change how Meta delivers ads. It changed how organizations need to work to stay competitive.

Brands that still treat creative testing as a later-stage task (something the media buyer “handles” after assets are delivered) are structurally misaligned with how Meta now learns and scales.

In 2026, Meta creative testing is an organizational capability, not a tactic.

Under the old model, teams could afford to separate strategy, creative production, and media delivery. That workflow breaks under Andromeda. Today, performance depends on how well these functions collaborate around signal design:

- creatives must be developed with algorithmic learning in mind

- media buyers must understand creative intent, not just budgets

- strategists must think in systems, not campaigns

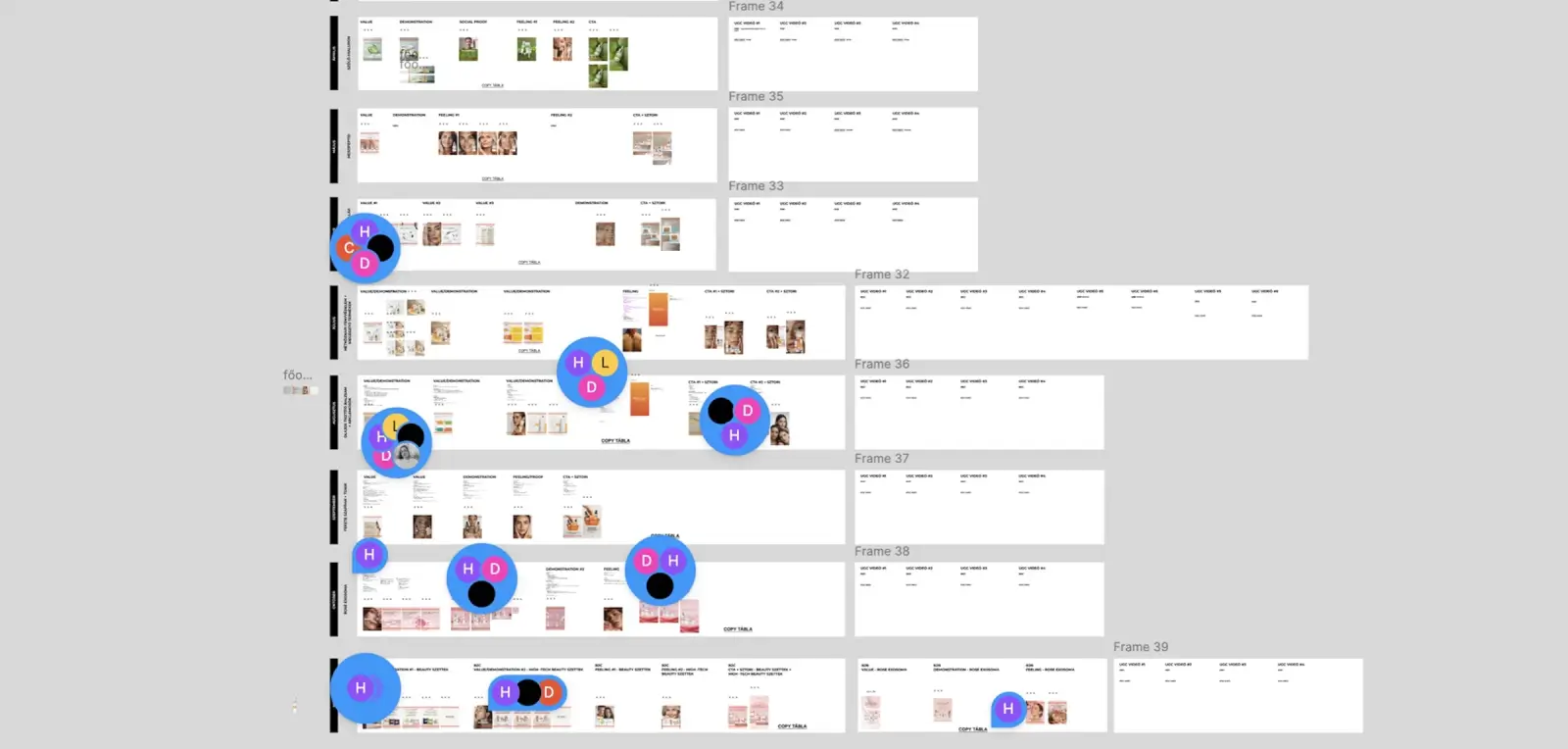

A snapshot of our working system in Figma, broken down into OMNI creatives, UGC videos, and separate supporting sales creatives

Media teams shift from optimization to interpretation. Media buyers used to “find winners.” Now, their real value lies in reading signals. They will spend more time analyzing performance data and understanding where and in which contexts the algorithm delivers their creatives, rather than focusing on manual targeting setup.

This is exactly why Evolut developed and owns the OMNIPRESENCE™ method, not as a content idea, but as an operating model for modern performance marketing.

Common Mistakes and How to Avoid Them

Most performance issues we see on Meta in 2026 aren’t caused by “bad creatives” or “rising competition.” They’re caused by teams applying old logic to a new system.

Below are the most common mistakes brands make in Meta creative testing today and what to do instead.

Mistake #1: Confusing variation with diversity

What most teams do:

They launch multiple “new” creatives that look different on the surface but are fundamentally the same – the same message, the same structure, the same story, just slightly reworded or visually adjusted. From an internal perspective, this feels like active testing and iteration.

Why it fails:

Under Andromeda, Meta recognizes these assets as a single creative signal. Minor tweaks don’t create new learning, so the algorithm has nothing new to explore. As a result, delivery concentrates, performance plateaus, and teams mistakenly assume they need even more variations, when what they actually need is true creative diversity at the idea and narrative level.
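A quick way to spot this pattern in your own reporting is to measure how concentrated spend is across the ads in a campaign. The sketch below assumes a hypothetical export of spend per ad and computes the top ad’s share plus a Herfindahl-style concentration index. The ad names and numbers are illustrative, and no threshold here comes from Meta.

```python
def spend_shares(spend_by_ad):
    """Convert absolute spend per ad into shares of total spend."""
    total = sum(spend_by_ad.values())
    if total <= 0:
        raise ValueError("total spend must be positive")
    return {ad: spend / total for ad, spend in spend_by_ad.items()}


def concentration(spend_by_ad):
    """Return (top_share, hhi).

    hhi is the sum of squared shares: 1/n when delivery is perfectly even
    across n ads, and 1.0 when a single ad receives all spend.
    """
    shares = spend_shares(spend_by_ad)
    top_share = max(shares.values())
    hhi = sum(s * s for s in shares.values())
    return top_share, hhi


# Hypothetical spend export: ad name -> spend in account currency.
spend = {"ad_a": 800.0, "ad_b": 100.0, "ad_c": 50.0, "ad_d": 50.0}
top, hhi = concentration(spend)
print(f"top ad share: {top:.0%}, concentration index: {hhi:.3f}")
```

If the index stays high after a batch of “new” creatives goes live, that is a hint the batch added variations rather than new signals.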

Mistake #2: Killing creatives too early

What most teams do:

They judge a new creative in the first 48–72 hours, pause it after a few bad metrics, and move on quickly, especially if early ROAS or CPA doesn’t match existing winners. The decision is made at the ad level, based on a short snapshot of delivery.

Why it fails:

In the Andromeda era, early delivery is often exploratory. Meta is still learning where the creative fits best: which placements, contexts, and user intent patterns it resonates with. When you kill ads too early, you interrupt that learning process, often cutting off creatives that could have become scalable winners once the algorithm found the right distribution context.
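One way to guard against premature pauses is to require a minimum amount of evidence before an ad is judged at all. The sketch below is illustrative only: the thresholds (`min_days`, `min_impressions`) are placeholder assumptions to be tuned per account, not Meta guidance, and the `AdStats` fields are hypothetical names for values from a reporting export.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    # Hypothetical fields from a reporting export.
    name: str
    days_live: int
    impressions: int
    spend: float
    revenue: float


def review(ad, target_roas, min_days=7, min_impressions=5000):
    """Return 'keep_learning', 'keep', or 'review_for_pause'.

    The default thresholds are placeholder assumptions, not platform rules.
    """
    if ad.days_live < min_days or ad.impressions < min_impressions:
        return "keep_learning"  # too little data to judge either way
    roas = ad.revenue / ad.spend if ad.spend > 0 else 0.0
    return "keep" if roas >= target_roas else "review_for_pause"


# A two-day-old ad with weak early ROAS is not judged yet.
new_ad = AdStats("ugc_hook_b", days_live=2, impressions=1200, spend=40.0, revenue=20.0)
print(review(new_ad, target_roas=2.0))
```

The point of the design is that weak early ROAS alone never triggers a pause; the ad only becomes eligible for review once both evidence thresholds are met.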

Mistake #3: Over-segmenting campaigns

What most teams do:

They split campaigns into multiple ad sets based on interests, behaviors, or small audience definitions, often duplicating the same creatives across each segment. Budgets are fragmented, and each ad set is expected to “prove” performance on its own.

Why it fails:

Over-segmentation restricts Meta’s ability to learn. By narrowing the delivery environment, you limit how the algorithm can test creatives across different contexts and intent signals. Instead of helping performance, excessive structure slows learning, reduces reach, and prevents Meta from discovering where a creative could work best at scale.

Mistake #4: Treating ads as isolated experiments

What most teams do:

They evaluate each ad on its own, asking whether it “won” or “lost,” and then move on to the next test. Learnings stay tied to individual executions, and once an ad is paused, the insight often disappears with it.

Why it fails:

Single ads are noisy and context-dependent. Under Andromeda, Meta learns far more from repeated signals around the same idea than from one-off executions. When ads aren’t connected through shared concepts, the algorithm struggles to build momentum, and teams miss the opportunity to turn insights into scalable creative frameworks.
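To keep learnings attached to ideas rather than individual executions, results can be rolled up by concept before any decision is made. The sketch below assumes each ad row carries a hand-assigned, hypothetical `concept` label alongside spend and revenue from a reporting export; the rows are made-up examples.

```python
from collections import defaultdict


def rollup_by_concept(ads):
    """Sum ad count, spend, and revenue per concept, then derive ROAS."""
    totals = defaultdict(lambda: {"ads": 0, "spend": 0.0, "revenue": 0.0})
    for ad in ads:
        t = totals[ad["concept"]]
        t["ads"] += 1
        t["spend"] += ad["spend"]
        t["revenue"] += ad["revenue"]
    return {
        concept: {**t, "roas": t["revenue"] / t["spend"] if t["spend"] else 0.0}
        for concept, t in totals.items()
    }


# Hypothetical rows: two executions of one concept, one of another.
ads = [
    {"name": "ugc_15s", "concept": "ingredient_education", "spend": 100.0, "revenue": 150.0},
    {"name": "carousel", "concept": "ingredient_education", "spend": 100.0, "revenue": 350.0},
    {"name": "founder_pov", "concept": "founder_story", "spend": 200.0, "revenue": 300.0},
]
for concept, stats in rollup_by_concept(ads).items():
    print(concept, stats)
```

In this made-up data, the weaker `ugc_15s` execution would look like a loser on its own, yet the concept it belongs to outperforms the other one once both executions are counted together.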

Mistake #5: Optimizing only for short-term ROAS

What most teams do:

They optimize aggressively toward immediate return, pausing creatives that don’t hit ROAS targets quickly and prioritizing only hard-selling, conversion-focused ads. Engagement, education, and mid-funnel creatives are often seen as expendable in this approach to Meta creative testing.

Why it fails:

Meta’s algorithm learns from behavior, not just purchases. When you remove signal-rich creatives too early, you limit the system’s ability to understand intent, context, and future conversion potential. Over time, this narrows learning, weakens scaling, and makes performance increasingly fragile – even if short-term ROAS looks acceptable.

Conclusion

The Andromeda era didn’t make Meta creative testing harder; it made it more honest. The algorithm now rewards brands that understand how performance is discovered, not just optimized; that invest in creative as a system rather than a last-mile asset; and that feed Meta with diversity, depth, and consistency instead of shortcuts.

Audience hacks, micro-optimizations, and short-term wins still exist, but they no longer compound. What compounds now is signal quality, and signals are born from creative strategy.

This is the real shift behind modern Meta creative testing: you’re no longer testing ads to find winners; you’re testing ideas, narratives, and belief systems to teach the algorithm how your brand should scale.

That’s exactly why Evolut built and owns the OMNIPRESENCE™ method.